How to Use Visual Intelligence: Google Lens iPhone Alternative

What to Know

- Visual Intelligence is an Apple Intelligence feature that only works on iPhones with the Camera Control button.

- Comparable to Google Lens, iPhone’s Visual Intelligence lets you look up an image on the internet.

- You can use ChatGPT with Visual Intelligence and have Siri read photographed text out loud.

Visual Intelligence may be the best Apple Intelligence feature on the iPhone 16! Often compared to Google Lens, iPhone’s version lets you take an image and search for it on Google. You can also ask ChatGPT about the image, have photographed text summarized or read aloud, and more. We’ll teach you how to use Apple Visual Intelligence.

Jump To:

- What Is Apple's Visual Intelligence?

- How to Use Visual Intelligence on iPhone

- 6 Ways to Use Visual Intelligence

- FAQ

What Is Apple's Visual Intelligence?

Visual Intelligence is an Apple Intelligence feature that was released with iOS 18.2. Unlike many other Apple Intelligence features, you do need an iPhone 16 model to use Visual Intelligence, because it requires the Camera Control button. Visual Intelligence lets you run a reverse image search like you would using Google Lens. However, Visual Intelligence has extra features, like asking ChatGPT a question, having Siri read photographed text out loud, or generating a written summary. If you take an image of the storefront of a business, Visual Intelligence may be able to give you information about it, such as the phone number, email, opening hours, and so much more! Before trying the steps below, check which iPhone model you have and which version of iOS it is running to confirm compatibility.

How to Use Visual Intelligence on iPhone

There is so much you can do with Visual Intelligence! Here’s how to use it on your iPhone once you've enabled Apple Intelligence:

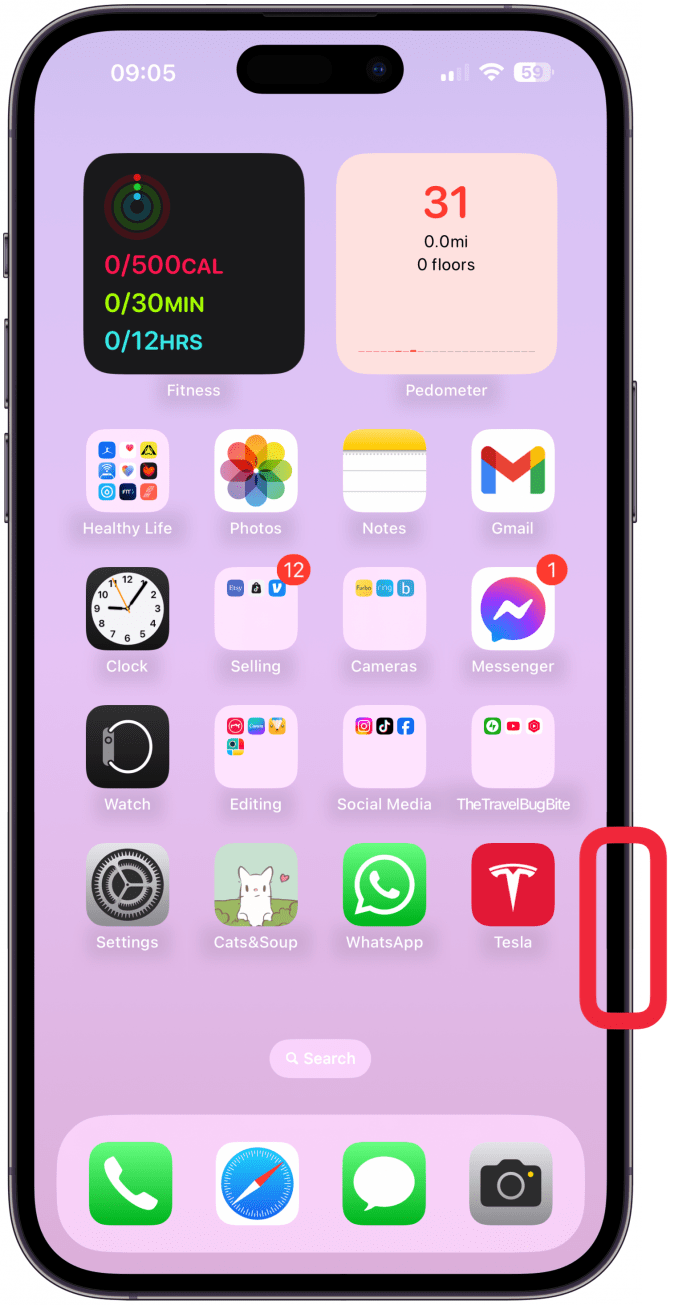

- While holding your iPhone vertically, not horizontally, press and hold the Camera Control button until the Visual Intelligence view shows up.

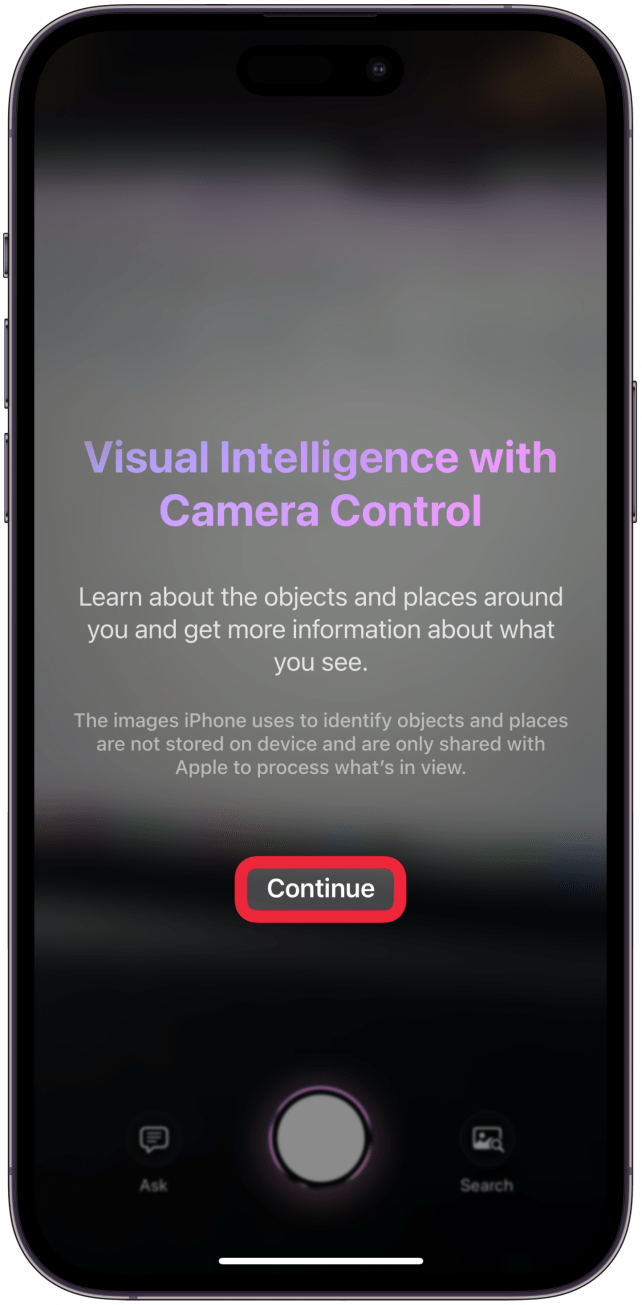

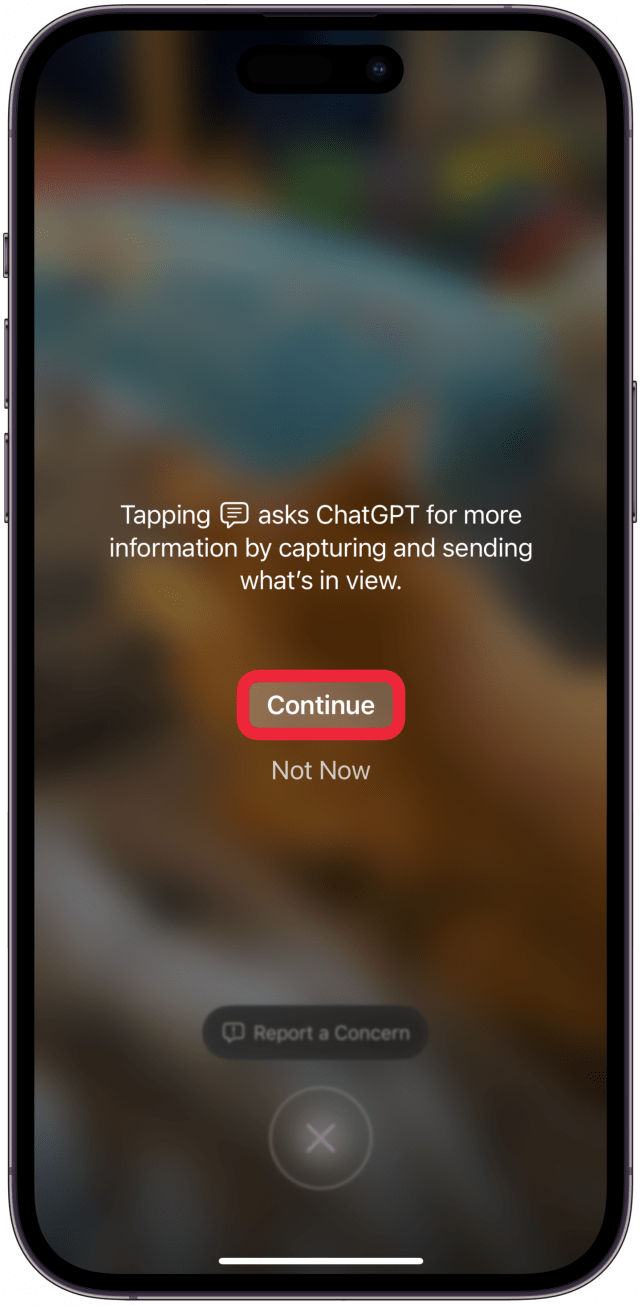

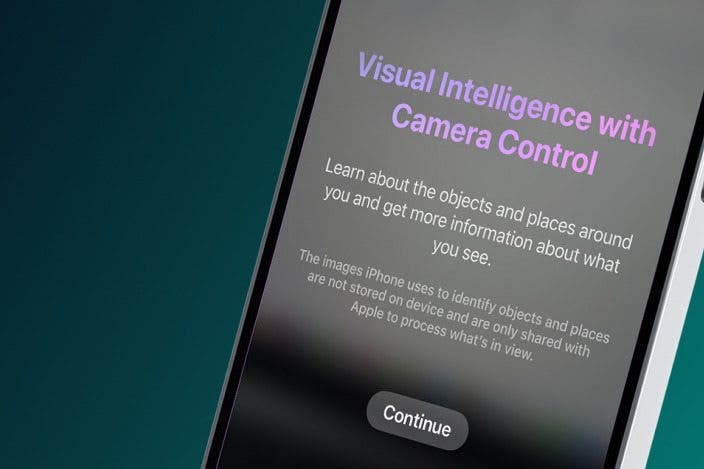

- The first time you do this, you will get an informational pop-up on your screen. Tap Continue once you’ve read everything.

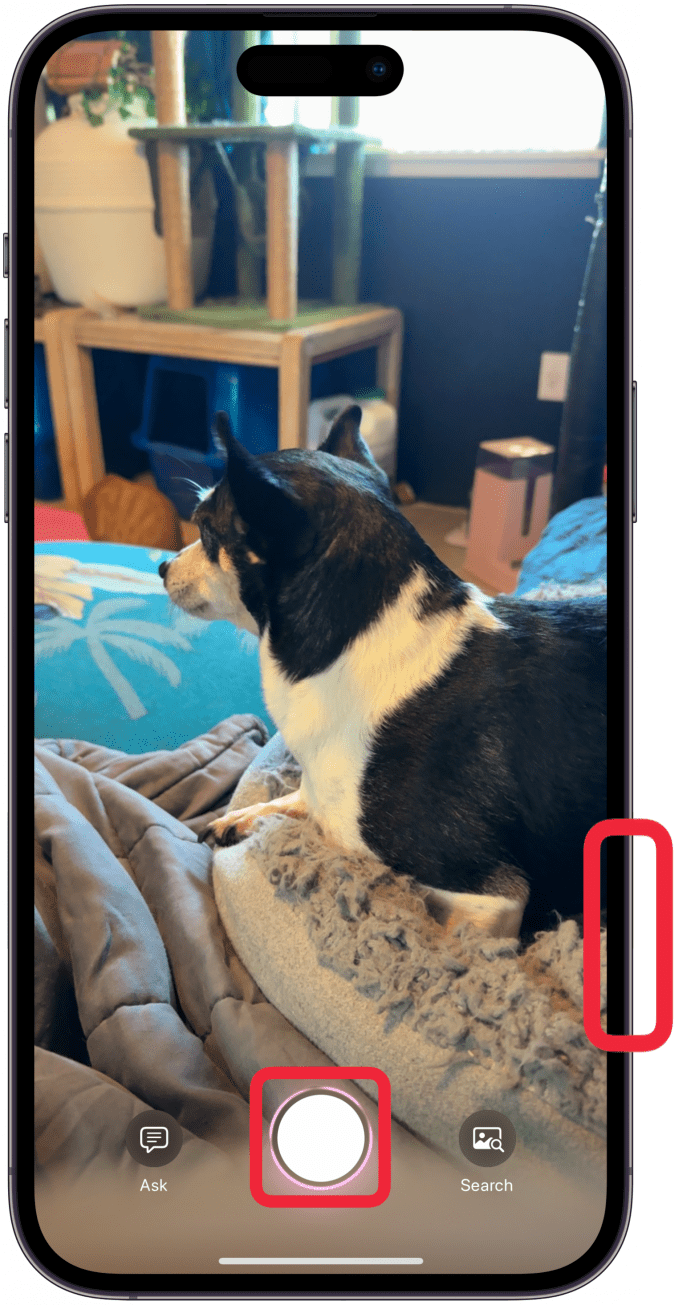

- Tap the shutter button on the screen or push the Camera Control button to take a photo, which will let you see all the Visual Intelligence options. If you tap on Search or Ask before taking a photo, your phone will use the image on the screen to search Google or research what's in front of the camera with ChatGPT.

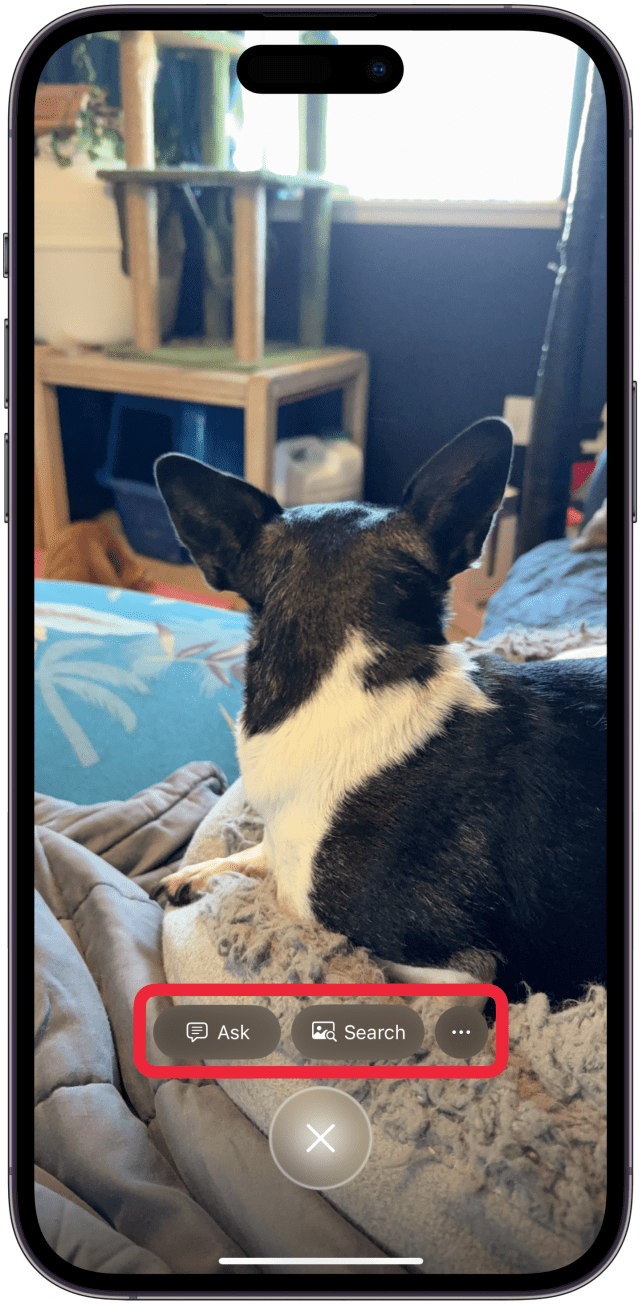

- Depending on what you photograph, you’ll see some different options.

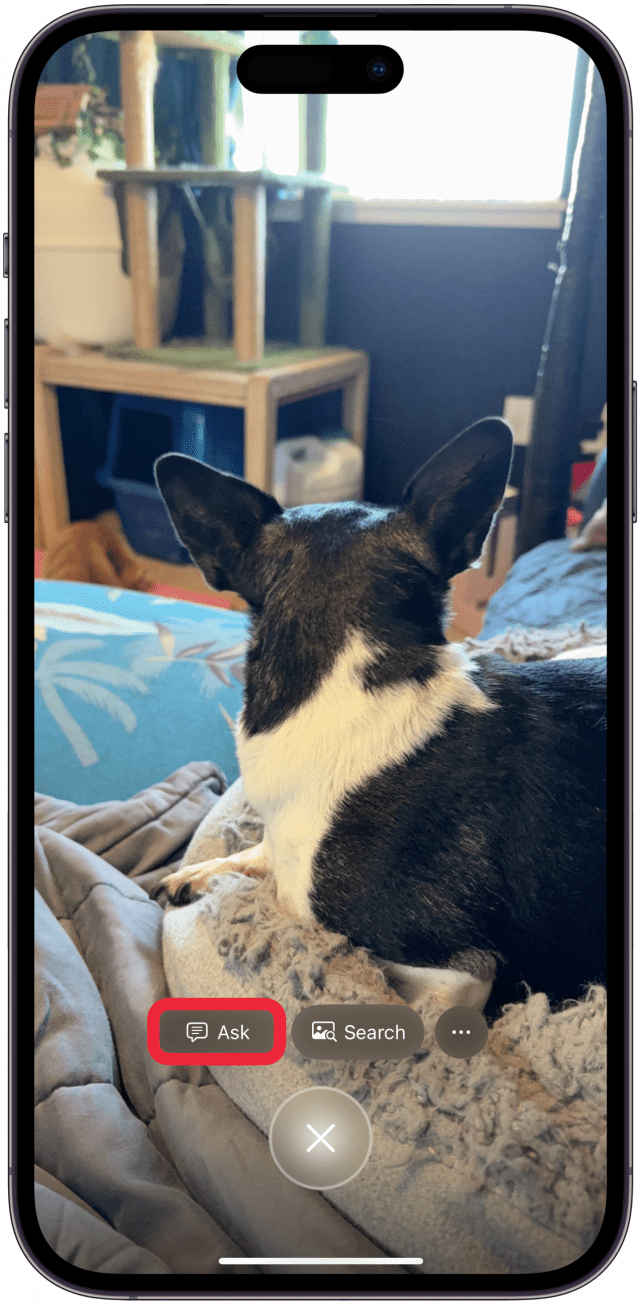

- Tap Ask to use ChatGPT to research the image.

- The first time you use this feature, you will get a pop-up with information to help you understand how it works. Tap Continue.

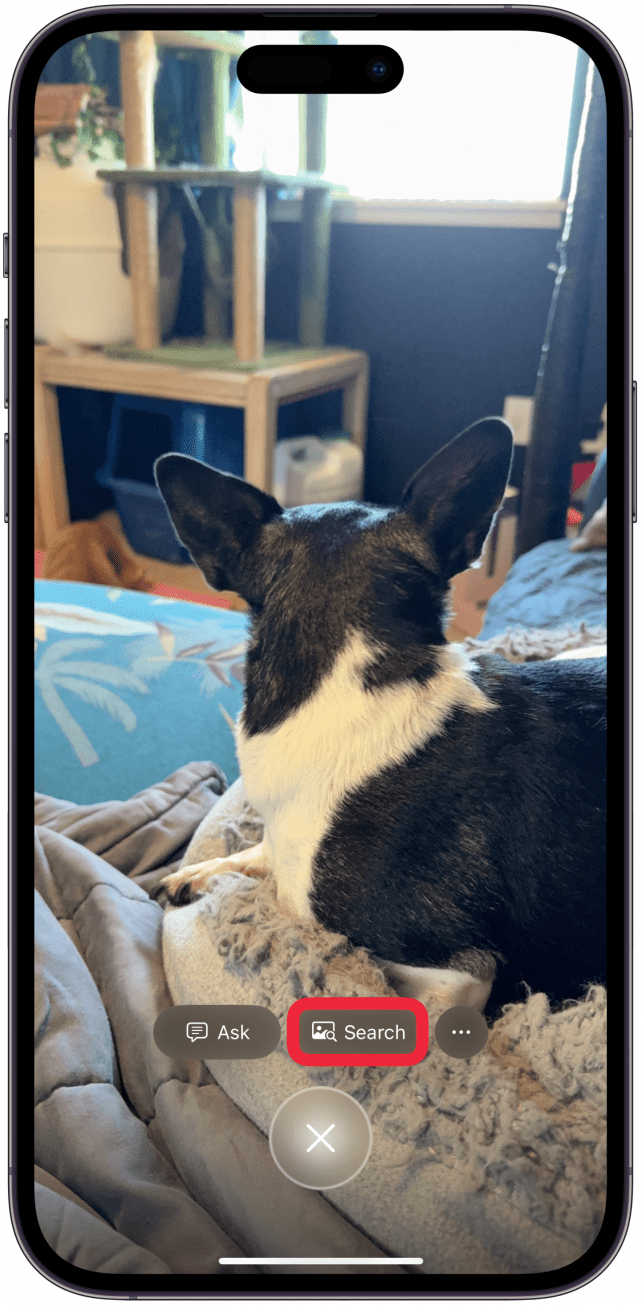

- Tap Search to search for the image on Google.

- The first time you use this feature, you will get a pop-up with information to help you understand how it works. Tap Continue.

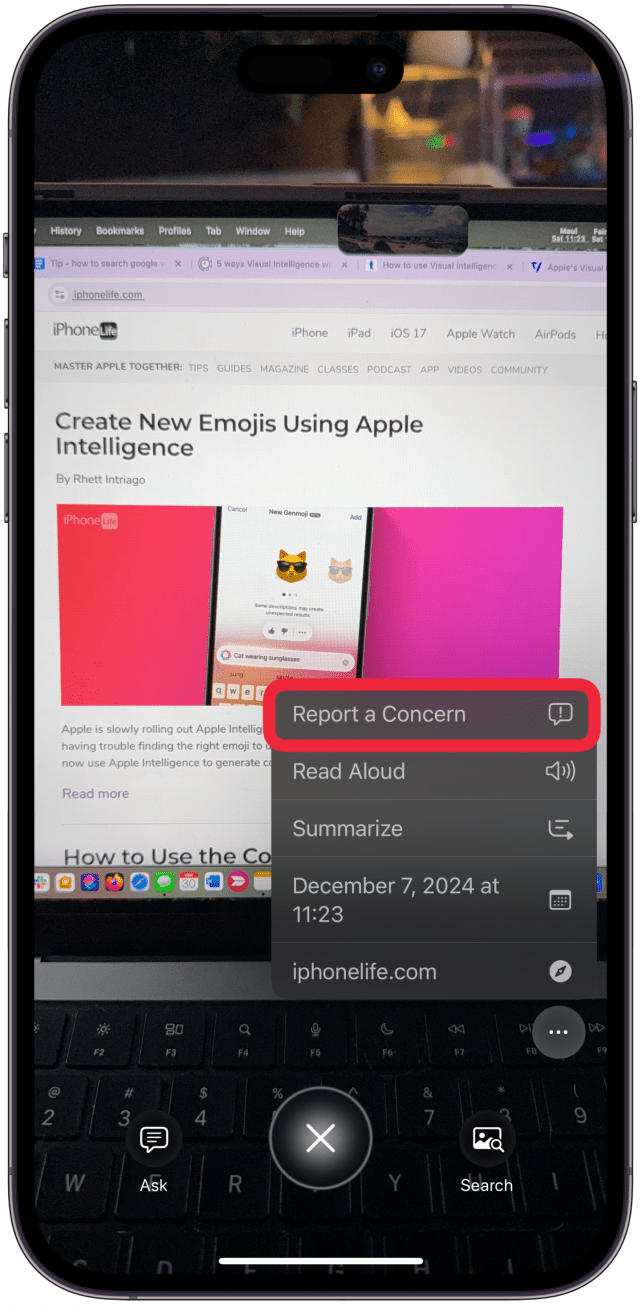

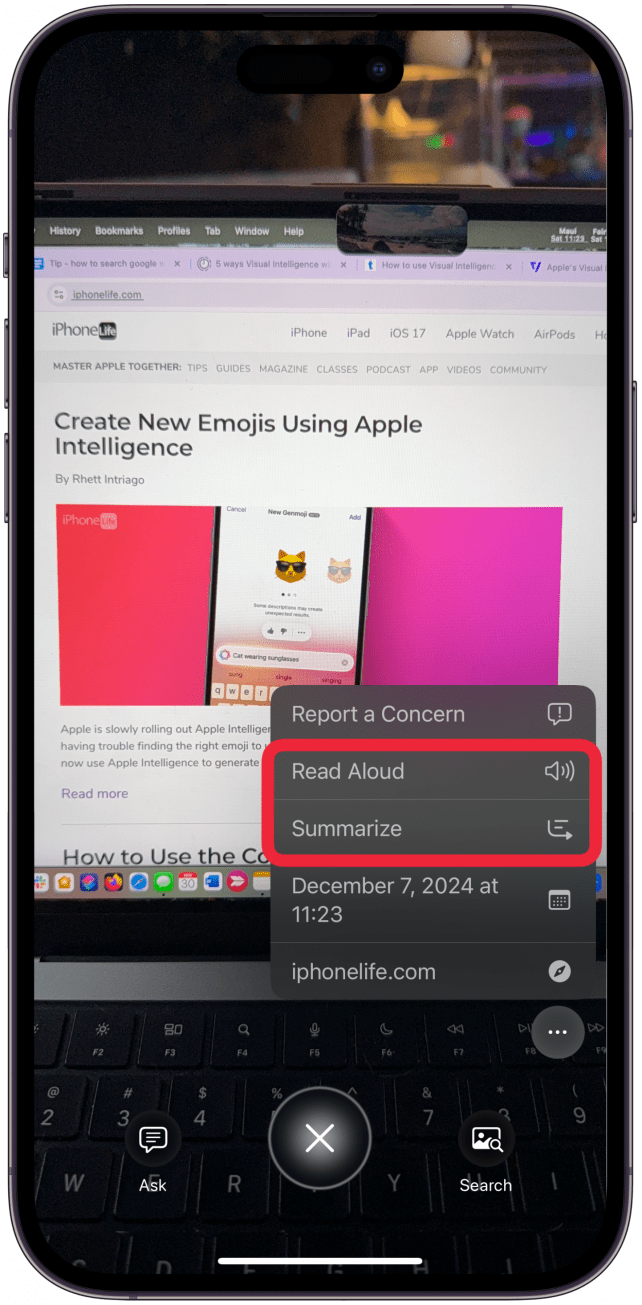

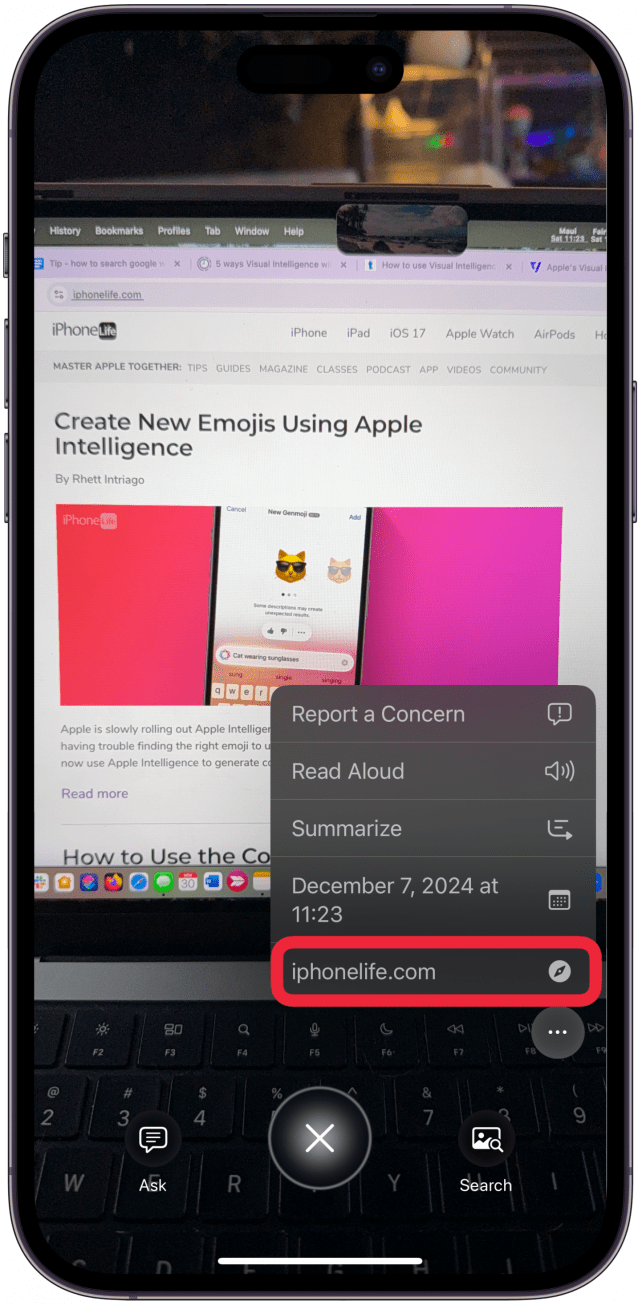

- Sometimes there will be a More button (three dots). Tap it.

- If something about how Visual Intelligence processed the image concerns you, tap Report a Concern. Apple takes these reports seriously and uses them to improve its software.

- If the image contains text, you’ll be able to select Read Aloud to have Siri read the photographed text to you, or you can tap Summarize to get a written summary. Read Aloud and Summarize will only work if you take an image of a complete text; if you photograph a section that cuts off sentences, these options will not appear.

- If the image contains a date, you will see the option to add it as an event directly in your Calendar app.

- If there is a URL in the image, it will be identified, and you can tap on it to go straight to the website.

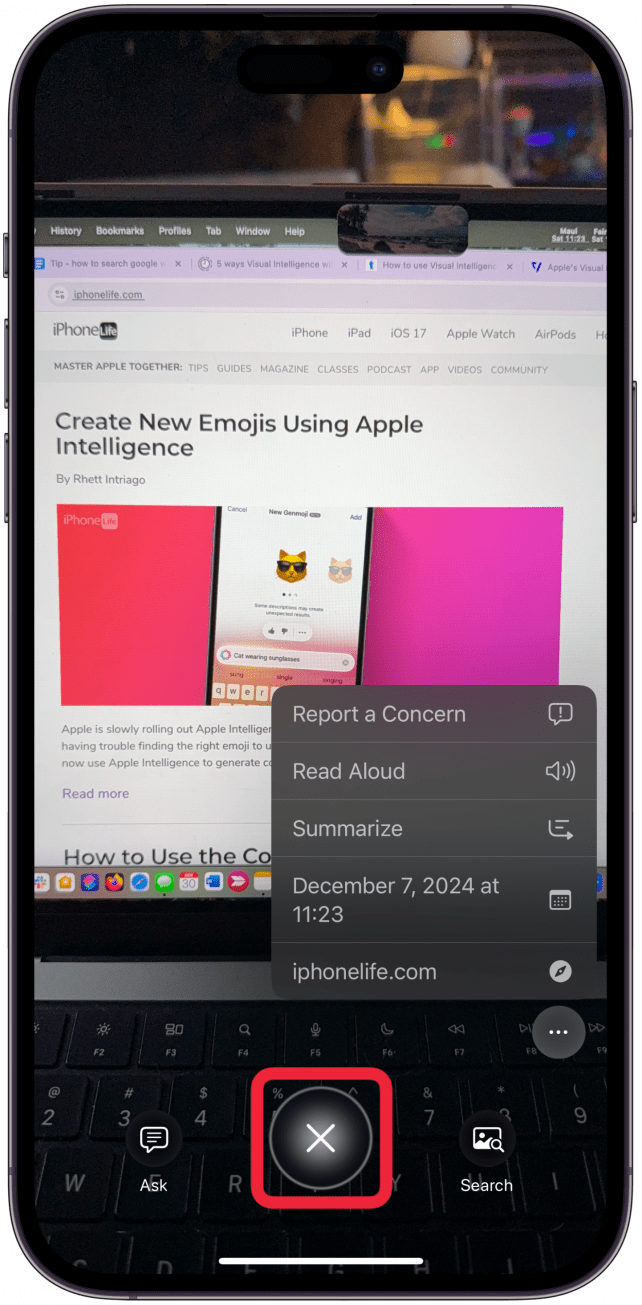

- Tap the X icon over the shutter button to go back and take another image.

Now you know how to use Visual Intelligence on iPhone 16 models. For more Apple Intelligence tips, don’t forget to check out our free Tip of the Day newsletter!

6 Ways to Use Visual Intelligence

Visual Intelligence can do a lot. Below are some practical ways to use it in your daily life.

1. Reverse Search an Image via Google

Searching for an image on Google is a great way to identify a product you are looking at. For items such as furniture, this can even help you find an online store where you can buy that exact item or something similar. A photo of a meal can even lead you to the recipe. However, it was already possible to discover recipes via the Photos app without Apple Intelligence.

2. Get the Contact Information for a Business

Visual Intelligence can help you get information about a business when you take a photo of its storefront. Sometimes, this might be a phone number, email, or website. However, not every business will be recognized.

3. Identify an Animal or Plant

Taking a photo of an animal or plant using Visual Intelligence will let your iPhone guess the breed or type, although a correct identification is not guaranteed. While this is exciting, it was already possible in the Photos app without Apple Intelligence, so we can hopefully expect it to work even better than before.

4. Solve Math Equations Using ChatGPT

Take a photo of an equation using Visual Intelligence, and ChatGPT can solve it for you! Make sure the equation is written and photographed clearly, and it is always best to double-check the answer by solving it yourself. Artificial intelligence is not perfect and can make mistakes.

5. Get a Written Summary of Text

If you take a photo of text in a book, on a museum placard, menu, website, etc., Visual Intelligence can summarize it for you. The summary appears as a written paragraph that you can copy and paste into a note or elsewhere on your iPhone.

6. Have Siri Read Text Out Loud

Whatever text you photograph can also be read aloud using Siri. Don’t forget that you can customize Siri’s voice based on your preferences so that everything that’s read out loud sounds exactly like you want it to.

Now you know how to use Visual Intelligence! I hope you agree with me that this is one of the best Apple Intelligence features because it is so versatile and has many practical uses. Next, learn how to create new emojis with Apple Intelligence.

FAQ

- How to turn on Apple Intelligence? Before you can use any Apple Intelligence feature, including Visual Intelligence, you have to join the AI waitlist in your iPhone settings.

- How to use Google Lens on iPhones? You can use the Google Lens iPhone feature via the Chrome app and do reverse image searches with it. This is a good option if your iPhone isn’t compatible with Visual Intelligence.

- Does iPhone 16 Pro AI alter photos? Yes, technically: all iPhones automatically process photos to improve them once you take them. To limit this, you can use RAW mode. iPhone 16 models and iPhone 15 Pro and Pro Max do have extra Apple Intelligence features, like the Clean Up tool.

Olena Kagui

Olena Kagui is a Feature Writer at iPhone Life. In the last 10 years, she has been published in dozens of publications internationally and won an excellence award. Since joining iPhone Life in 2020, she has written how-to articles as well as complex guides about Apple products, software, and apps. Olena grew up using Macs and exploring all the latest tech. Her Maui home is the epitome of an Apple ecosystem, full of compatible smart gear to boot. Olena’s favorite device is the Apple Watch Ultra because it can survive all her adventures and travels, and even her furbabies.
