Hands On with Apple Intelligence

Apple Intelligence has been available for a few months now, but does it live up to Apple's promises? Are AI-powered features on the iPhone worth the hype? I tested all the currently available Apple Intelligence features, and here’s what I found.

Who Can Use Apple Intelligence?

If you have an iPhone 15 Pro, iPhone 15 Pro Max, or any model in the iPhone 16 lineup, your device supports Apple Intelligence. To get started, open the Settings app, select Apple Intelligence & Siri, and toggle on Apple Intelligence. You can return here and toggle it off if you change your mind. You may be placed on a wait list; if so, you’ll get a notification when your device is ready to use its new AI-powered features. So, what can your iPhone do once Apple Intelligence is enabled?

Same Old Siri

The first thing you’ll notice when using Apple Intelligence is Siri’s new interface: instead of an iridescent bubble at the bottom of the screen, a glow surrounds the edges of the display. At the time of writing, that’s where the changes end. Siri’s new skills are not available yet, though they are expected to arrive this spring with iOS 18.4. When you ask Siri questions or make requests, you’ll get the same answers as on iOS 17 and on devices without Apple Intelligence. For now, Siri’s only new features are the redesigned interface and the ability to understand your request even if you stumble over your words or correct yourself mid-sentence. For example, you can say, “Set a timer for five minutes, no, ten minutes,” and Siri will set the timer for ten minutes instead of five.

After iPhone 16 ads showing people using an AI-powered Siri began airing, my dad told me he was thinking about upgrading his phone. I had to explain that even if he bought the newest iPhone, he still wouldn’t be able to use all the advertised features. While I’m excited to see Apple Intelligence in action, it’s extremely disappointing that one of Apple’s most heavily advertised features isn’t even available yet.

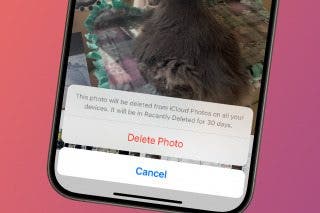

Clean Up the Backgrounds of Your Photos

My favorite Apple Intelligence feature is the Clean Up tool in the Photos app. When you open a photo’s editing screen, you’ll see a Clean Up option. Select it, and Apple Intelligence will analyze the photo and offer to remove people or distracting objects in the background. If it doesn’t detect someone automatically, you can manually circle or scribble over the person to remove them. This is probably the Apple Intelligence tool I use most; it’s so nice to be able to remove people who end up in the background of photos.

Besides Clean Up, the Photos app now has a smarter search function, and you can use AI to create Memory Movies simply by describing the kind of photos you want to see and the music you want to hear.

Punch Up Your Writing

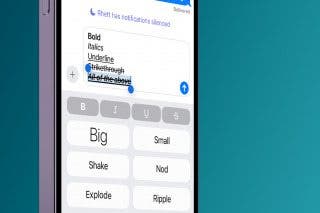

This neat update is actually several features wrapped into one. Writing Tools can rewrite, summarize, and proofread text, as well as convert it into bulleted lists, tables, and key points. You can also change the tone of your writing to Friendly, Professional, or Concise. In my testing, Writing Tools work surprisingly well. Apple Intelligence is very good at rewriting text and catching spelling and grammatical mistakes, and the summarizing features can easily distill information into shorter paragraphs, bullet points, or tables. Tone changes are more hit or miss: it feels as if the AI simply ran a few words through a thesaurus rather than reworking the text itself.

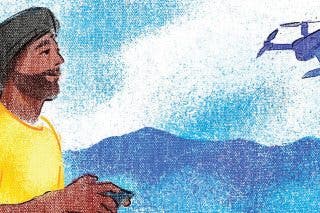

Playing Around with the Image Playground

The next big Apple Intelligence feature is Image Playground, which uses AI to generate artwork and imagery. Thankfully, Image Playground is quite limited in the types of images it can create, which should help prevent misuse. For example, it can’t produce photorealistic images, so if you ask it to generate an image of a friend, the result will look exaggerated and cartoonish rather than lifelike. It’s also limited to two distinct art styles: Animation, which has a Pixar-esque look, and Illustration, which resembles a colored pencil drawing. In my testing, the images it creates almost always match the description I provide.

Beyond Image Playground, you can also create entirely new emojis. Anywhere you can type text, tap the emoji icon. Next to the search bar, you’ll see an emoji with a plus sign; tap it, describe the emoji you want, and Apple Intelligence will do its best to create it for you.

Smarter Predictions?

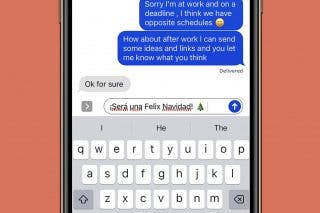

Text predictions can be useful when you’re struggling to find the right words for a message. Now, when you receive a text, Apple Intelligence suggests how you might respond; to accept a suggestion, just tap the space bar. These suggestions are pretty hit or miss. I usually type my own responses because, while the AI often captures the essence of what I want to say, the wording isn’t quite right. Still, it’s a welcome feature, since it occasionally offers a good jumping-off point for a reply. Overall, though, this is probably one of my least-used Apple Intelligence features. To toggle it on or off, go to Settings > General > Keyboard > Show Predictions Inline.

Summarize All the Things

Next up are notification and email summaries. Apple Intelligence can analyze your notifications and summarize their contents. This can help you decide which notifications are most important and quickly grasp what a notification says without opening it. However, it can also lead to some humorous misunderstandings. For the most part, Apple Intelligence accurately summarizes text messages, but occasionally I’ll get a summary that doesn’t match the actual message at all. It can be funny, and luckily, it’s easy to open the message or preview it by touching and holding the notification.

One downside of notification summaries is that they cause a short delay in notifications. I noticed that text notifications would arrive on my MacBook about 10 seconds before they appeared on my iPhone. I realized this was because Apple Intelligence was taking a few extra seconds to analyze each message and generate a summary before delivering the notification.

Notification summaries are enabled by default when you turn on Apple Intelligence, but if you want to confirm you have them enabled (or if you want to disable them), just go into the Settings app, tap Notifications, and select Summarize Notifications. You can control notification summaries for individual apps or enable/disable them altogether.

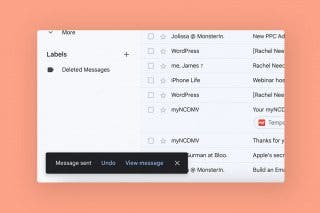

Summaries also work within the Mail app itself, not just in notifications. When viewing your Primary inbox in Mail, you’ll see summaries of your emails. This works well and does a good job of surfacing the most important information at a glance. To make sure this feature is enabled, open Settings, scroll down to Apps, and find Mail in the list. Use the Summarize Message Previews toggle to enable or disable this AI-powered feature specifically for Mail.

Native ChatGPT Integration

One of the biggest changes is ChatGPT’s integration into iOS. Using ChatGPT is completely optional. To enable it, go into Settings, select Apple Intelligence & Siri, and scroll down to ChatGPT under Extensions. You don’t need a ChatGPT account to use it, but you can sign in if you want to keep a record of your requests. When ChatGPT is enabled, Siri will always ask before using ChatGPT to fulfill more complex requests (unless you turn off the Confirm ChatGPT Requests toggle in Settings).

Although Siri itself isn’t smarter yet, ChatGPT at least lets us make more complex requests of our virtual assistant. Unfortunately, the experience isn’t as seamless as I’d like. I’m hopeful that future updates will improve both Siri and its ChatGPT integration.

Get Right to the Point

Safari now has an option to summarize web pages using Apple Intelligence. Tap the Page Menu button in the address bar, then tap Show Reader. In Reader view, you’ll see a Summarize button at the top of the page; tap it to get a quick, single-paragraph summary of the page. This can be handy for lengthy news articles or recipe pages. Sometimes you’ll even get a table of contents that you can use to navigate the page quickly: just tap a heading, and you’ll exit Reader view and jump to that section of the page.

Stay Focused & on Task

Another new Apple Intelligence feature is the Reduce Interruptions Focus. This Focus analyzes your notifications as they arrive and decides which ones you need to see right away and which can wait, helping you stay on task by keeping less important messages and alerts from distracting you.

Is Apple Intelligence Worth It?

In its current state, I don’t see a lot of use for Apple Intelligence. Even though I do a lot of writing for work, I’m not inclined to use AI to rewrite my work or change its tone. Text predictions rarely match exactly what I want to say, using Image Playground to generate art feels wrong, and the ChatGPT integration still isn’t a very smooth experience. Clean Up in the Photos app seems like the only fully fleshed-out AI feature.

I’m still hopeful that Apple will make meaningful improvements to Apple Intelligence in future updates. I also hope the new Apple Intelligence-powered Siri will be worth the wait and let me talk to Siri more naturally, instead of having to carefully choose my words so the virtual assistant understands what I’m trying to accomplish. I eagerly await iOS 18.4, when we should finally get access to this new Siri.

Rhett Intriago

Rhett Intriago is a Feature Writer at iPhone Life, offering his expertise in all things iPhone, Apple Watch, and AirPods. He enjoys writing on topics related to maintaining privacy in a digital world, as well as iPhone security. He’s been a tech enthusiast all his life, with experiences ranging from jailbreaking his iPhone to building his own gaming PC.

Despite his disdain for the beach, Rhett is based in Florida. In his free time, he enjoys playing the latest games, spoiling his cats, or discovering new places with his wife, Kyla.